At the start of November, I ran a seminar for a university transmedia course on ARGs, Alternate Reality Games. I was looking specifically at three examples of ARG-like projects that had been taken as real, with disturbing effects. This post lists some of the links that I referred to. Another will follow with some thoughts on ARGs.

- An excellent introduction to ARGs is the 50 Years of Text Adventures series on The Beast, a 2001 ARG written to promote the Spielberg movie AI. This was the first major ARG.

- I referred to a number of ARGs in my introduction. Majestic, from Electronic Arts, came out shortly after The Beast and was an interesting failed experiment. One of the most successful high-profile games was Why So Serious? (2007), promoting the movie The Dark Knight, which included phones hidden inside cakes.

- Although I didn’t refer to it in the session, I loved the Wired magazine interview with Trent Reznor discussing how his band Nine Inch Nails made an album, Year Zero, based around an ARG. I also came across some fascinating references to EDOC Laundry, a clothing line that was also an ARG.

- Perplex City was another successful ARG, run by a team including Adrian Hon. One of the puzzles from Perplex City was only solved after fifteen years. Some excellent background on Hon is in a 2013 interview, Six to Start: Foundation’s Edge.

- It’s interesting how ARGs provoked so much interest, but have not broken through to the mainstream. Alternate Reality Games Could Still Take Over the World (And Your Life) was a good article discussing this. It quotes ARG designer Andrea Phillips: “A lot of energy has [transferred] into lightly interactive web series, room escape games, narratives-in-a-box. Things that use a few of the ARG tools (tangible artifacts, in-story websites, email) but don’t use the full-fledged ARG formula.”

- I discussed the issues around the funding of ARGs, and how difficult that has proved. I also looked at how TV shows like Westworld and Severance have used the ‘mystery box’ concept to build audiences that research and discuss the plots in a similar manner to the communities that investigated ARGs.

- I then moved on to my three examples, the first of which was Ong’s Hat. Gizmodo produced an excellent overview, Ong’s Hat: The Early Internet Conspiracy Game That Got Too Real. The Ong’s Hat story was created by Joseph Matheny, and his story is excellently told in the two-part Information Golem podcast.

- Also worth mentioning is Matheny’s involvement in the John Titor hoax.

- The Slender Man was created for a Photoshop challenge, and immediately inspired a number of ARG-like projects. Cat Vincent did an excellent job of summarising Slender Man for Darklore, paying particular attention to the way ARGs contributed to the development of the mythos. Cat also wrote a follow-up article in 2012. I previously used Cat’s research for my talk on ‘Brown Notes’, The Internet Will Destroy Us. Slender Man became a tragic case of ostension, when two children who were obsessed with the character stabbed a classmate in Wisconsin.

- I then went on to talk about conspiracy theory, using Abbie Richards’s Conspiracy Chart to discuss the movement of conspiracy theories from (what many people saw as) harmless and silly ideas to dangerous (and often anti-semitic) ones.

- I used the ‘Birds Aren’t Real’ hoax as an example of using transmedia to promote a conspiracy theory, as discussed in a NY Times article, Birds Aren’t Real, or Are They? Inside a Gen Z Conspiracy Theory.

- Adrian Hon wrote an insightful article, What ARGs Can Teach Us About QAnon. While careful to draw clear lines between QAnon and ARGs, the piece nevertheless drew interesting parallels.

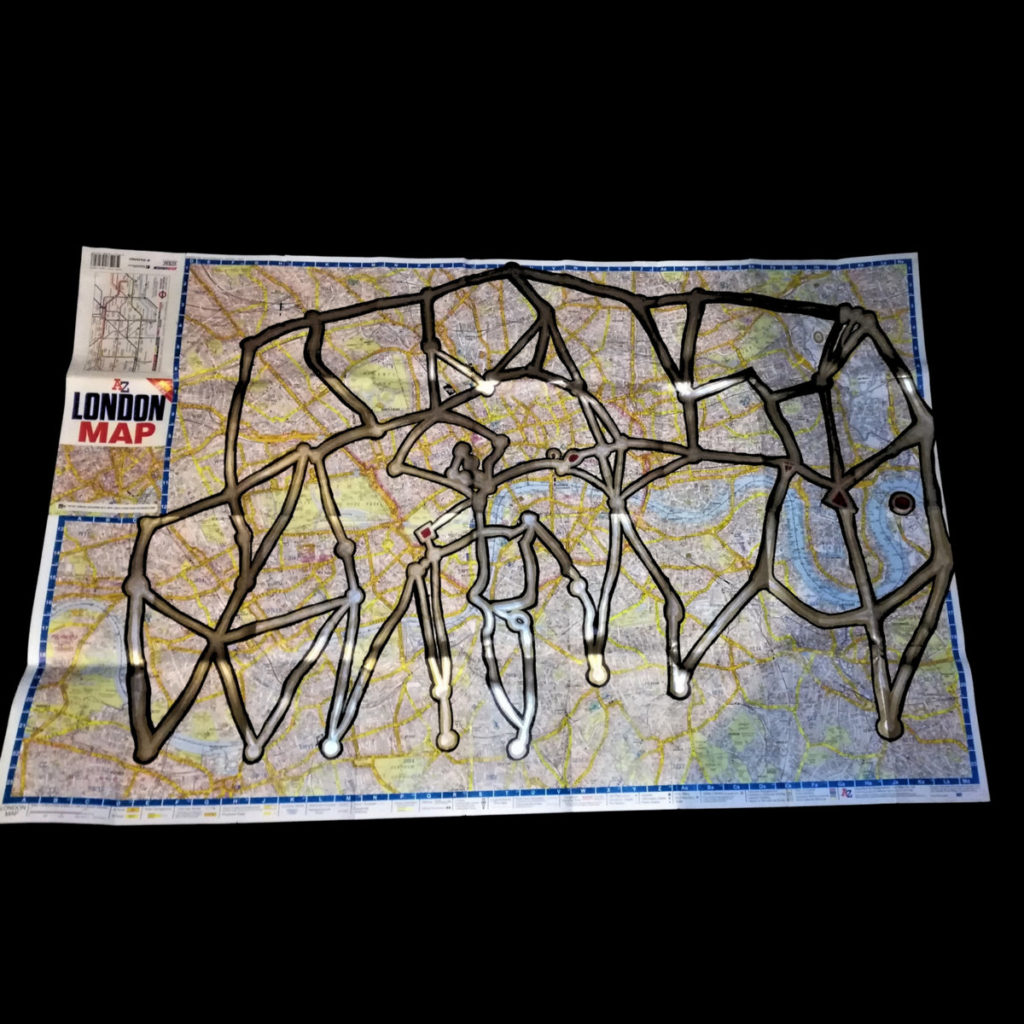

- I then concluded by talking about how ARGs often pretend to be real (‘this is not a game’), and the ways in which the lines between real and imaginary can be played with, particularly given how the media has an insatiable need for stories. I talked a little about Chris Parkinson and the film Tusk, referring to a 2014 talk where he encouraged people to “Leave your stories lying around in unorthodox, unethical locations”.